
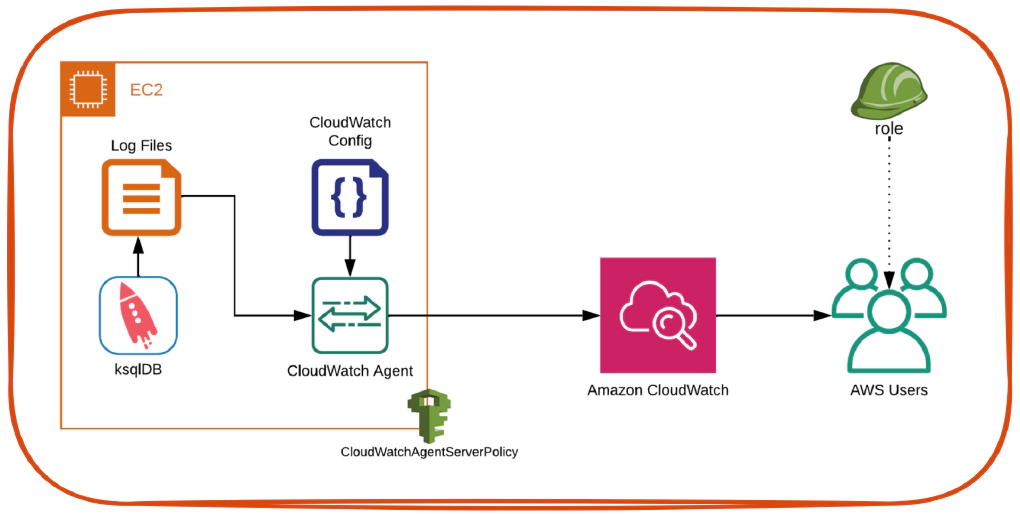

In this article we will see how to send log files generated by an application running on EC2 to CloudWatch using the CloudWatch agent. The application logs used for this demo are the ksqlDB logs on an EC2 instance.

Step 1: Create and configure an EC2 instance (t2.small) on which to install Confluent Platform.

Step 2: Create a .ppk file from your .pem file. See here for details.

Step 3: Log in to the EC2 instance using PuTTY and the .ppk file, with ec2-user@<instance-name> as the host name

Create a user in the EC2 instance

Step 1: Create a user

sudo useradd -m <user-name>

Step 2: Set password for the user

sudo passwd <user-name>

Step 3: Enable password authentication by setting PasswordAuthentication to yes in sshd_config

sudo nano /etc/ssh/sshd_config

PasswordAuthentication yes

Step 4: Restart the SSH service

sudo systemctl restart sshd
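Steps 3 and 4 can also be done without opening an editor. The sketch below is one possible way to do it, assuming a sed that supports -i with a backup suffix and -E (both GNU and BSD sed do). The function name enable_password_auth is hypothetical and not part of any tool. It does not account for drop-in files under /etc/ssh/sshd_config.d, which can override the main file on some distributions.

```shell
# Hypothetical helper: make sure "PasswordAuthentication yes" is set in an
# sshd_config-style file. Pass the file to edit as the first argument.
enable_password_auth() {
  if grep -Eq '^[[:space:]]*#?[[:space:]]*PasswordAuthentication[[:space:]]' "$1"; then
    # The directive exists (possibly commented out): rewrite it in place,
    # keeping a copy of the original file with a .bak suffix.
    sed -i.bak -E 's/^[[:space:]]*#?[[:space:]]*PasswordAuthentication[[:space:]].*/PasswordAuthentication yes/' "$1"
  else
    # The directive is absent: append it at the end of the file.
    printf 'PasswordAuthentication yes\n' >> "$1"
  fi
}
```

To apply it to the real file, the function body would have to run as root (for example from a root shell), followed by sudo systemctl restart sshd as in Step 4.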

Install OpenSSH and generate an RSA key on your local machine

Step 1: Install OpenSSH server

sudo apt update

sudo apt upgrade

sudo apt install openssh-server

Step 2: Generate an RSA key pair

ssh-keygen -t rsa

Step 3: Copy the public SSH key to the target machine

On machines with ssh-copy-id, run

ssh-copy-id <user-name>@entechlog-vm-04

On machines without ssh-copy-id, run

cat ~/.ssh/id_rsa.pub | ssh <user-name>@entechlog-vm-04 "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys"

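Attach the CloudWatchAgentServerPolicy policy to the IAM role attached to the EC2 instance

You should see a confirmation message after attaching the policy to the role

Install the CloudWatch agent and check its status by running the commands below. Until the agent is configured and started, the status is reported as stopped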

sudo yum install amazon-cloudwatch-agent

sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -m ec2 -a status

    {
      "status": "stopped",
      "starttime": "",
      "version": ""
    }

Configure the CloudWatch agent's collect_list by editing the configuration file with sudo nano /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json. In this file:

run_as_user is the user who has access to the log files

collect_list is the list of log files to collect

file_path is the log location on the EC2 instance

log_group_name is the log group name in CloudWatch; it should be updated for each use case

log_stream_name is the destination log stream in CloudWatch

    {
      "agent": {
        "run_as_user": "root",
        "metrics_collection_interval": 10,
        "logfile": "/opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log"
      },
      "logs": {
        "logs_collected": {
          "files": {
            "collect_list": [
              {
                "file_path": "/var/log/confluent/ksql/ksql.log",
                "log_group_name": "dev-ksql.log",
                "log_stream_name": "{hostname}",
                "timezone": "Local"
              },
              {
                "file_path": "/var/log/confluent/ksql/ksql-kafka.log",
                "log_group_name": "dev-ksql-kafka.log",
                "log_stream_name": "{hostname}",
                "timezone": "Local"
              },
              {
                "file_path": "/var/log/confluent/ksql/ksql-streams.log",
                "log_group_name": "dev-ksql-streams.log",
                "log_stream_name": "{hostname}",
                "timezone": "Local"
              }
            ]
          }
        },
        "log_stream_name": "{hostname}",
        "force_flush_interval": 15
      }
    }
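Before starting the agent, it can help to confirm that every file_path in the configuration points at a file the current user can read. The sketch below is only an illustration: list_log_paths and check_log_paths are hypothetical helper names, and the text matching assumes each "file_path" key and its value sit on one line, as in the configuration above. It does not expand wildcard patterns, which the agent also accepts in file_path.

```shell
# Print every "file_path" value found in a CloudWatch agent config file.
list_log_paths() {
  grep -o '"file_path"[[:space:]]*:[[:space:]]*"[^"]*"' "$1" |
    sed -e 's/^"file_path"[[:space:]]*:[[:space:]]*"//' -e 's/"$//'
}

# Report whether each configured log file is readable by the current user.
check_log_paths() {
  list_log_paths "$1" | while IFS= read -r log_path; do
    if [ -r "$log_path" ]; then
      echo "readable: $log_path"
    else
      echo "not readable: $log_path"
    fi
  done
}
```

For example, check_log_paths /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json prints one line per entry in collect_list. Because the check uses the permissions of whoever runs it, run it as the user named in run_as_user to get a meaningful answer.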

Start the CloudWatch agent by running

sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -m ec2 -a start

If the start fails with the error "2020/09/19 17:51:50 Failed to merge multiple json config files." or "2020/09/19 17:51:50 Under path : /agent/run_as_user | Error : Different values are specified for run_as_user", open the default configuration with sudo nano /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.d/default and change run_as_user from cwagent to root
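The merge error above means that two configuration files disagree on run_as_user. A quick way to see which file sets which value is to print the setting from each file. This is a minimal sketch; show_run_as_user is a hypothetical helper name, and it assumes the key and value appear on one line in each file.

```shell
# Print the run_as_user setting found in each of the given config files,
# prefixed with the file name so conflicting values are easy to spot.
show_run_as_user() {
  grep -H -o '"run_as_user"[[:space:]]*:[[:space:]]*"[^"]*"' "$@"
}
```

For the setup in this article it could be called with /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json and /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.d/default as arguments.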

Verify the status of the agent by running

sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -m ec2 -a status

    {
      "status": "running",
      "starttime": "2020-09-19T17:29:29+0000",
      "version": ""
    }

Verify the agent log by running

less /opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log

2020-09-19T17:57:36Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2020-09-19T17:57:37Z I! [logagent] piping log from dev-ksql.log/ip-172-31-31-103.ec2.internal(/var/log/confluent/ksql/ksql.log) to cloudwatchlogs

2020-09-19T17:57:37Z I! [logagent] piping log from dev-ksql-kafka.log/ip-172-31-31-103.ec2.internal(/var/log/confluent/ksql/ksql-kafka.log) to cloudwatchlogs

2020-09-19T17:57:37Z I! [logagent] piping log from dev-ksql-streams.log/ip-172-31-31-103.ec2.internal(/var/log/confluent/ksql/ksql-streams.log) to cloudwatchlogs
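On a busy agent log, the "piping log from" lines can be hard to spot. The sketch below pulls them out and prints each source file next to its log group and stream. The function name list_piped_logs is hypothetical, and the pattern assumes the message format shown in the log lines above.

```shell
# Summarise which files the agent reports it is shipping to CloudWatch Logs.
# Each output line has the form: <file path> -> <log group>/<log stream>
list_piped_logs() {
  grep -F '[logagent] piping log from' "$1" |
    sed -E 's/.*piping log from ([^(]+)\(([^)]+)\) to cloudwatchlogs.*/\2 -> \1/'
}
```

Run it against the agent log, for example list_piped_logs /opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log. A file from collect_list that is missing from the output is a sign that the agent has not picked it up.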

In the AWS console, navigate to CloudWatch –> CloudWatch Logs –> Log Groups. Here we should see the new log groups for the ksql logs

Click a log group to view its log streams. You should see multiple hostnames if the ksqlDB cluster has multiple nodes

Click the hostname to view the logs

Here you can also search for a specific keyword in the logs

Hope you found this blog useful for streaming log files from an EC2 instance to CloudWatch Logs.
