
Orchestration in the context of IT and software development refers to the automated coordination, management, and execution of complex workflows and processes across various systems and applications. It’s a critical component in ensuring that tasks are executed in the correct sequence, at the right time, and with the necessary resources.
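At its core, "executing tasks in the correct sequence" means ordering steps by their dependencies. The sketch below is not tied to any particular orchestrator. It uses Python's standard-library graphlib module to compute a valid order for a small pipeline, and the step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders_customers": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_orders_customers"},
}


def execution_order(dependencies):
    """Return the steps in an order where every step comes after all of its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(execution_order(pipeline))
```

The two extract steps don't depend on each other, so an orchestrator is free to run them in parallel. If the dependencies contain a cycle, `TopologicalSorter` raises `graphlib.CycleError` instead of returning an order.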

Orchestration is not a new concept. In my experience, having worked with various orchestration tools like CA7, Autosys, Control-M, and Airflow, I’ve observed how these tools have evolved to meet the changing needs of the industry. Each of these tools has its strengths, whether in managing batch jobs in mainframe environments or orchestrating complex data pipelines in the cloud.

A common but more basic form of orchestration is cron, which is widely used for scheduling jobs on Unix-like systems. While cron is simple and effective for straightforward tasks, it lacks the advanced features needed to manage complex workflows across distributed systems. This is where more sophisticated orchestration tools like Prefect come into play, offering enhanced flexibility, error handling, and scalability.
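To make the difference concrete: a cron entry only launches a command on schedule, and cron itself won't retry it if it fails. The stdlib-only sketch below shows the kind of retry logic you would otherwise hand-roll around each job. `run_with_retries` and `flaky_extract` are hypothetical names. Prefect exposes the same idea declaratively, for example through the `retries` and `retry_delay_seconds` arguments of its `@task` decorator.

```python
import time


def run_with_retries(step, retries=3, delay_seconds=0.0):
    """Call step(); if it raises, wait delay_seconds and try again, up to `retries` extra times."""
    for attempt in range(retries + 1):
        try:
            return step()
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the last error to the caller
            time.sleep(delay_seconds)


# Hypothetical job step that fails twice with a transient error, then succeeds.
attempts = []


def flaky_extract():
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("transient failure")
    return "rows extracted"


result = run_with_retries(flaky_extract, retries=3)
print(result, "after", len(attempts), "attempts")  # rows extracted after 3 attempts
```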

Prefect is a modern orchestration tool that builds on the lessons learned from its predecessors. Unlike traditional orchestrators that often rely on rigid schedules and fixed dependencies, Prefect offers greater flexibility, making it easier to handle dynamic workflows and real-time data processing. It’s designed to address the challenges of modern data workflows, where the scale, complexity, and need for reliability are constantly increasing.

In this blog, I’ll share my journey with Prefect, exploring its components, showcasing its capabilities, and providing a hands-on example to demonstrate why it stands out in the world of orchestration tools. Whether you’re new to orchestration or considering Prefect as an alternative to other tools, this guide will offer valuable insights into how it can streamline your workflows and improve operational efficiency.

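The code used in this article is available in the orchestration-examples repository (https://github.com/entechlog/orchestration-examples), under the prefect-demo-02 directory.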

In this section, we’ll explore the fundamental components of Prefect, including Workspaces, Blocks, Work Pools, Flows, Tasks, and Deployments. Understanding these building blocks is essential for effectively orchestrating workflows in Prefect, whether you’re working in a local environment or across cloud infrastructures.

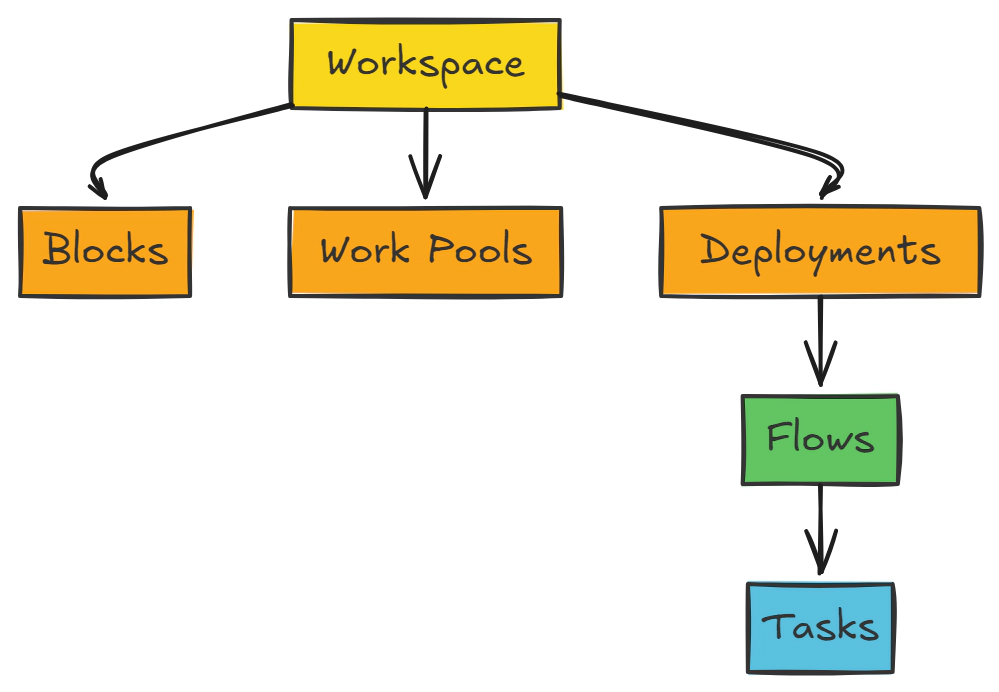

A Workspace is the central hub where all your Prefect activities are organized. It acts as a container for your flows, deployments, and configurations. Within a workspace, you can monitor, manage, and collaborate on various data workflows.

A good practice is to create one workspace per environment (e.g., development, staging, production), or even one workspace per environment per logical group, such as a department (e.g., finance, marketing). This isolates permissions so that only the relevant teams can access their workflows and configurations, improving both security and organizational clarity.

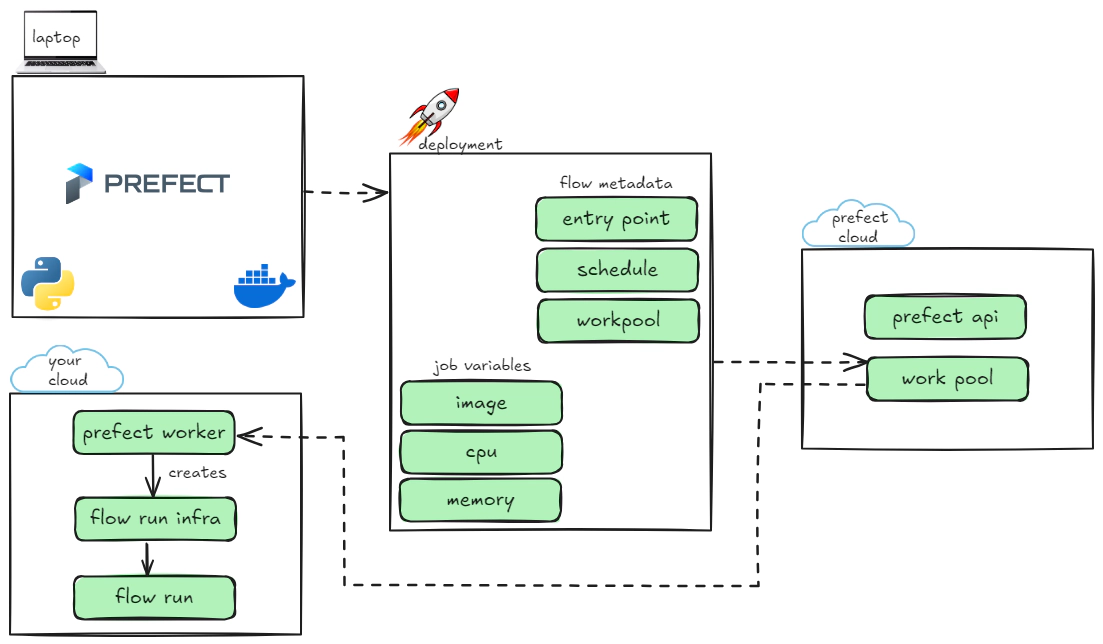

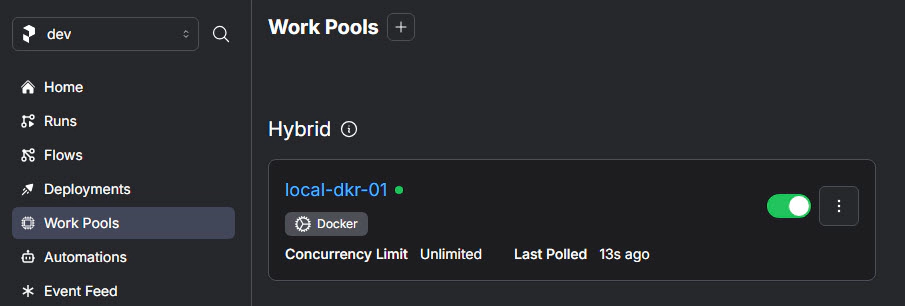

A Work Pool defines the infrastructure where your flows run, such as a local Docker host or a cloud container service. Workers (agents, in older Prefect setups) poll a Work Pool for scheduled flow runs and execute them on that infrastructure, so Work Pools let you specify where and how your flows are executed.

Note: A Work Pool can be your local Docker environment or a cloud-based setup in AWS (like ECS, EKS), GCP, or Azure. This flexibility lets you choose the most suitable environment for your workflows, whether on-premises or in the cloud.

Each Work Pool is tied to a specific Workspace, and a Workspace can have one or more Work Pools. This setup allows you to organize and manage different execution environments within the same Workspace, providing flexibility and control over where your flows are executed.
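This one-to-many relationship can be pictured as a simple data structure. The sketch below is a toy model for illustration only, not Prefect's API, and it assumes work pool names are unique within a workspace. The pool name `local-dkr-01` is the one used later in this demo, while `ecs-pool-01` is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WorkPool:
    name: str
    pool_type: str  # where runs execute, e.g. "docker" locally or "ecs" on AWS


@dataclass
class Workspace:
    name: str
    work_pools: dict = field(default_factory=dict)  # pool name -> WorkPool

    def add_work_pool(self, pool: WorkPool) -> None:
        """Attach a work pool to this workspace, rejecting duplicate pool names."""
        if pool.name in self.work_pools:
            raise ValueError(f"work pool {pool.name!r} already exists in {self.name!r}")
        self.work_pools[pool.name] = pool


dev = Workspace("dev")
dev.add_work_pool(WorkPool("local-dkr-01", "docker"))
dev.add_work_pool(WorkPool("ecs-pool-01", "ecs"))
print(sorted(dev.work_pools))  # ['ecs-pool-01', 'local-dkr-01']
```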

Blocks are reusable configurations that help you manage external resources and connections. Blocks can be anything from cloud storage credentials to database connections, and they are referenced within flows and tasks to simplify configuration management.
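The value of a Block is that configuration lives in one named place and every flow refers to it by name. The toy registry below illustrates that idea in plain Python. It is not Prefect's implementation, and `ConnectionBlock`, `save_block`, `load_block`, and the block name `warehouse-dev` are hypothetical. In Prefect itself, you save a block once and retrieve it in code with the `.load("block-name")` class method of its block type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionBlock:
    """Toy stand-in for a Block: a named, reusable bundle of connection settings."""
    host: str
    port: int
    user: str


_registry = {}  # block name -> ConnectionBlock


def save_block(name, block):
    """Store (or overwrite) a block under a name."""
    _registry[name] = block


def load_block(name):
    """Look up a block by name, failing loudly if it was never saved."""
    try:
        return _registry[name]
    except KeyError:
        raise KeyError(f"no block named {name!r}") from None


save_block("warehouse-dev", ConnectionBlock(host="db.dev.internal", port=5432, user="etl"))


# Two different tasks reuse the same configuration by name.
def extract_task():
    return f"extracting from {load_block('warehouse-dev').host}"


def load_task():
    return f"loading into {load_block('warehouse-dev').host}"


print(extract_task())  # extracting from db.dev.internal
```

Pointing both tasks at a new host then means re-saving one block rather than editing every task.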

A Flow is the container for your workflow logic. In Prefect, a flow is a Python function decorated with @flow that calls tasks in a specific order to achieve a desired outcome. Flows can be triggered manually or automatically, for example on a schedule.

Tasks are the individual units of work within a flow. Each task performs a specific operation, such as reading data from a database, processing data, or sending an email. Tasks can be reused across multiple flows.

A Deployment in Prefect is a way to package, schedule, and manage your flows. Deployments define how and when your flows should be executed. This includes specifying the flow, its parameters, schedules, and the Work Pool where it should run. Deployments help ensure that your flows are executed consistently and reliably according to your desired schedule or trigger.

Note: A Deployment can be tied to a specific Work Pool, allowing you to control where your flow runs. This flexibility ensures that your workflows can be executed in the right environment, whether that’s on-premises, in the cloud, or across multiple environments.
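Put together, a deployment is essentially a record that binds a flow entrypoint to parameters, an optional schedule, and a target Work Pool. The sketch below models that binding in plain Python for illustration; `Deployment` and `target_pool` are hypothetical names, not Prefect's API. The deployment name, entrypoint, and pool name mirror the deployment file used later in this post, and the cron string is only an example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Deployment:
    name: str
    entrypoint: str  # "path/to/file.py:flow_function"
    work_pool: str  # name of the Work Pool whose infrastructure runs the flow
    parameters: dict = field(default_factory=dict)
    schedule: Optional[str] = None  # e.g. a cron expression; None means on-demand only


def target_pool(deployment, available_pools):
    """Return the pool a deployment's runs are sent to, checking that it exists."""
    if deployment.work_pool not in available_pools:
        raise LookupError(f"work pool {deployment.work_pool!r} not found for {deployment.name!r}")
    return deployment.work_pool


nightly = Deployment(
    name="get-system-name-flow-dkr",
    entrypoint="../flows/get_system_name_flow.py:get_system_name",
    work_pool="local-dkr-01",
    schedule="0 2 * * *",  # hypothetical: every day at 02:00
)
print(target_pool(nightly, {"local-dkr-01"}))  # local-dkr-01
```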

In this section, we’ll walk through creating the necessary components for a Prefect workflow. This hands-on example uses Terraform to set up the workspace, work pool, and block, and then deploys flows to them with Python and the Prefect CLI.

Before we get started, make sure you have the following set up:

Docker: Essential for creating an isolated environment that’s consistent across all platforms. If you haven’t installed Docker yet, please follow the official installation instructions.

Terraform: We will be creating the required Prefect and Docker resources using Terraform. It’s beneficial to have a basic understanding of Terraform’s concepts and syntax to follow the deployment process effectively.

Prefect Account: You’ll need a Prefect Cloud account to interact with Prefect’s cloud services. If you haven’t signed up yet, create one on the Prefect Cloud website. A Prefect Cloud account lets you manage workspaces, flows, and deployments in one central place.

Ensure these prerequisites are in place to smoothly proceed with the upcoming sections of our guide.

To allow Terraform to interact with Prefect Cloud for deploying and managing resources, you’ll need to create a Prefect Cloud API key.

Terraform uses this API key to authenticate with Prefect Cloud, so it’s required for deploying and managing your Prefect resources.
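Because the API key grants access to your account, it’s safer to keep it out of scripts and source control. A common pattern is to read it from an environment variable: PREFECT_API_KEY is the variable Prefect’s own client reads, and Prefect Cloud workspace API URLs follow the pattern https://api.prefect.cloud/api/accounts/<account_id>/workspaces/<workspace_id>. The helper names below are hypothetical, and the IDs in the usage line are placeholders.

```python
import os

PREFECT_CLOUD_API = "https://api.prefect.cloud/api"


def read_api_key(env=None):
    """Fetch the Prefect Cloud API key from the environment instead of hardcoding it."""
    env = os.environ if env is None else env
    key = env.get("PREFECT_API_KEY")
    if not key:
        raise RuntimeError("PREFECT_API_KEY is not set")
    return key


def workspace_api_url(account_id, workspace_id):
    """Build the Prefect Cloud API URL that scopes requests to a single workspace."""
    return f"{PREFECT_CLOUD_API}/accounts/{account_id}/workspaces/{workspace_id}"


# Placeholder IDs for illustration only.
print(workspace_api_url("my-account-id", "my-workspace-id"))
```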

For this demo, we’ll use a Docker container called orchestration-tools, which includes Terraform and the other tools the demo requires. If you already have a machine with Terraform and the AWS CLI installed, you can skip this step.

Clone the orchestration-examples repo:

```bash
git clone https://github.com/entechlog/orchestration-examples.git
```

Change into the orchestration-tools directory and create a copy of .env.template named .env. For this demo, you don’t need to edit any variables.

```bash
cd orchestration-tools
cp .env.template .env
```

Start the container:

```bash
docker-compose -f docker-compose.yml up -d --build
```

Verify that the containers are running:

```bash
docker ps
```

Open a shell inside the container:

```bash
docker exec -it orchestration-tools /bin/bash
```

Verify the Terraform installation by checking its version:

```bash
terraform --version
```

In this section, you’ll set up the necessary resources for the Prefect demo using Terraform. Follow these steps to get everything up and running:

Change to the orchestration-examples/prefect-demo-02/terraform directory:

```bash
cd orchestration-examples/prefect-demo-02/terraform
```

Create a copy of the terraform.tfvars.template file named terraform.tfvars, then update terraform.tfvars with your Prefect account details and API key. The file also contains variables for AWS security groups and Snowflake blocks, which you can ignore if you don’t plan to test those parts of the demo.

```bash
cp terraform.tfvars.template terraform.tfvars
# Edit terraform.tfvars to add your specific values
```

Apply the Terraform configuration to create the required resources:

```bash
# Initialize the working directory and install or upgrade the required providers and modules
terraform init -upgrade

# Format the code
terraform fmt -recursive

# Review a summary of the planned changes
terraform plan

# Apply the changes to your target environment
terraform apply
```

Once terraform apply finishes, you’ll have a Workspace, a Work Pool, and a Block in your Prefect Cloud account, ready to use for creating and managing your Prefect flows and deployments.

In this section, we’ll walk you through the process of creating and deploying your first Prefect flow. Prefect flows are the core building blocks of your orchestration pipeline, allowing you to define and manage the execution of tasks in a structured and scalable way.

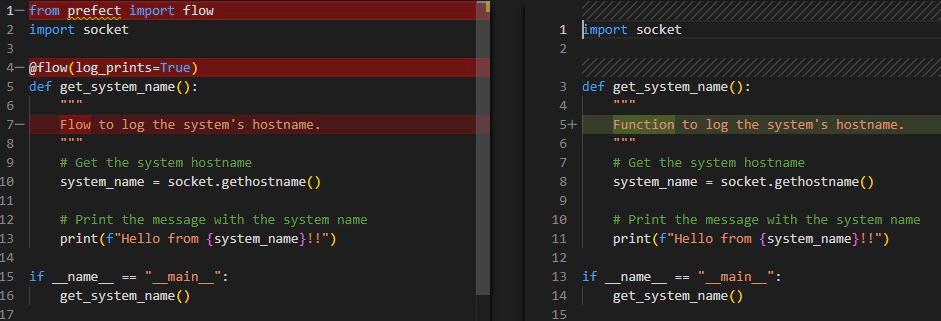

We’ll start with a simple example: a flow that retrieves and logs the hostname of the system it’s running on. This example will help you get familiar with the basic structure of a Prefect flow.

Let’s start with the basic Python code:

```python
import socket


def get_system_name():
    """
    Function to log the system's hostname.
    """
    # Get the system hostname
    system_name = socket.gethostname()

    # Print the message with the system name
    print(f"Hello from {system_name}!!")


if __name__ == "__main__":
    get_system_name()
```

Navigate to /orchestration-examples/prefect-demo-02/python/flows and run the script:

```bash
cd /orchestration-examples/prefect-demo-02/python/flows
python get_system_name_basic.py
```

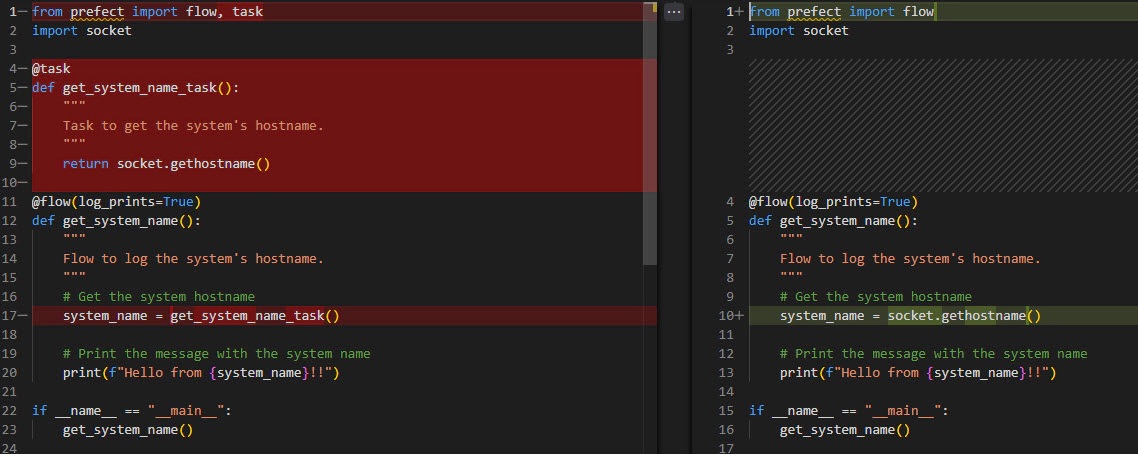

Converting the basic code to a Prefect flow takes only two small changes: add the Prefect import (from prefect import flow) and decorate the main function with @flow.
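Here’s a minimal sketch of what that change looks like; the get_system_name_flow.py file in the repository may differ in its details. The try/except fallback is not part of the real script: it only swaps in a no-op decorator so this snippet still runs where Prefect isn’t installed. The return value is also an addition, so the result can be checked.

```python
import socket

try:
    from prefect import flow  # the one new import the flow version needs
except ImportError:
    # Fallback for this sketch only: a no-op decorator when Prefect isn't installed.
    def flow(fn):
        return fn


@flow
def get_system_name():
    """
    Flow to log the system's hostname.
    """
    system_name = socket.gethostname()
    print(f"Hello from {system_name}!!")
    return system_name


if __name__ == "__main__":
    get_system_name()
```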

Log in to Prefect Cloud:

```bash
prefect cloud login -k YOUR_API_KEY
```

Run the Prefect flow by executing

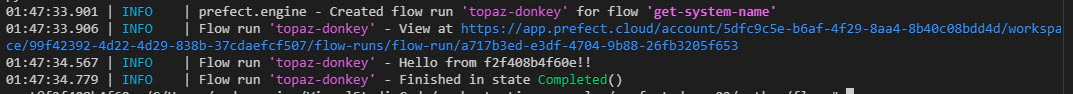

python get_system_name_flow.py

We’ll enhance our basic flow by converting the logic into tasks. Run the updated flow using

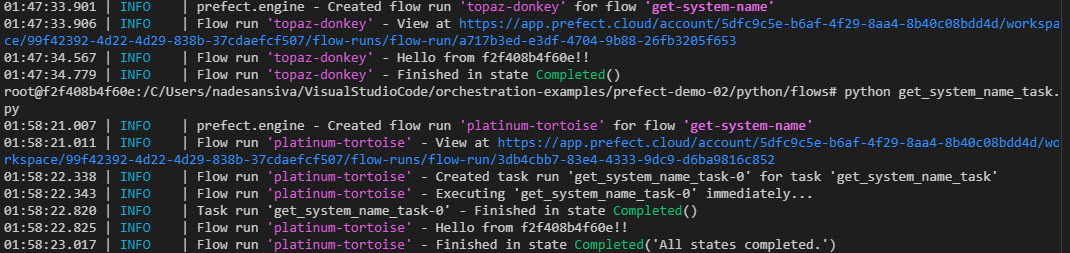

python get_system_name_task.py

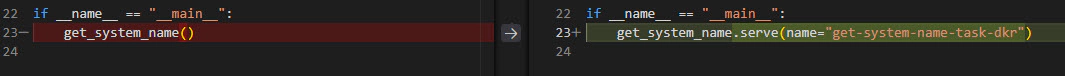
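A minimal sketch of a task-based version follows. The repository’s get_system_name_task.py may split the work differently, the task names `fetch_system_name` and `log_greeting` are hypothetical, and the ImportError fallback exists only so the snippet runs without Prefect installed. Inside a flow, calling a Prefect task directly runs it and returns its result, so the flow reads like ordinary sequential Python.

```python
import socket

try:
    from prefect import flow, task
except ImportError:
    # Fallback for this sketch only: no-op decorators when Prefect isn't installed.
    def flow(fn):
        return fn

    def task(fn):
        return fn


@task
def fetch_system_name() -> str:
    """Task: look up the hostname of the machine running the flow."""
    return socket.gethostname()


@task
def log_greeting(system_name: str) -> str:
    """Task: build and print the greeting."""
    message = f"Hello from {system_name}!!"
    print(message)
    return message


@flow
def get_system_name() -> str:
    """Flow: run the two tasks in sequence, passing the hostname along."""
    system_name = fetch_system_name()
    return log_greeting(system_name)


if __name__ == "__main__":
    get_system_name()
```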

Convert your task-based flow to a deployment by replacing the direct flow call with .serve(name="get-system-name-task-dkr") on the flow object. This creates a deployment with the specified name and keeps the script running so it can execute runs of that deployment.

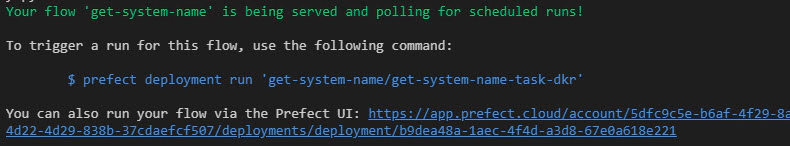

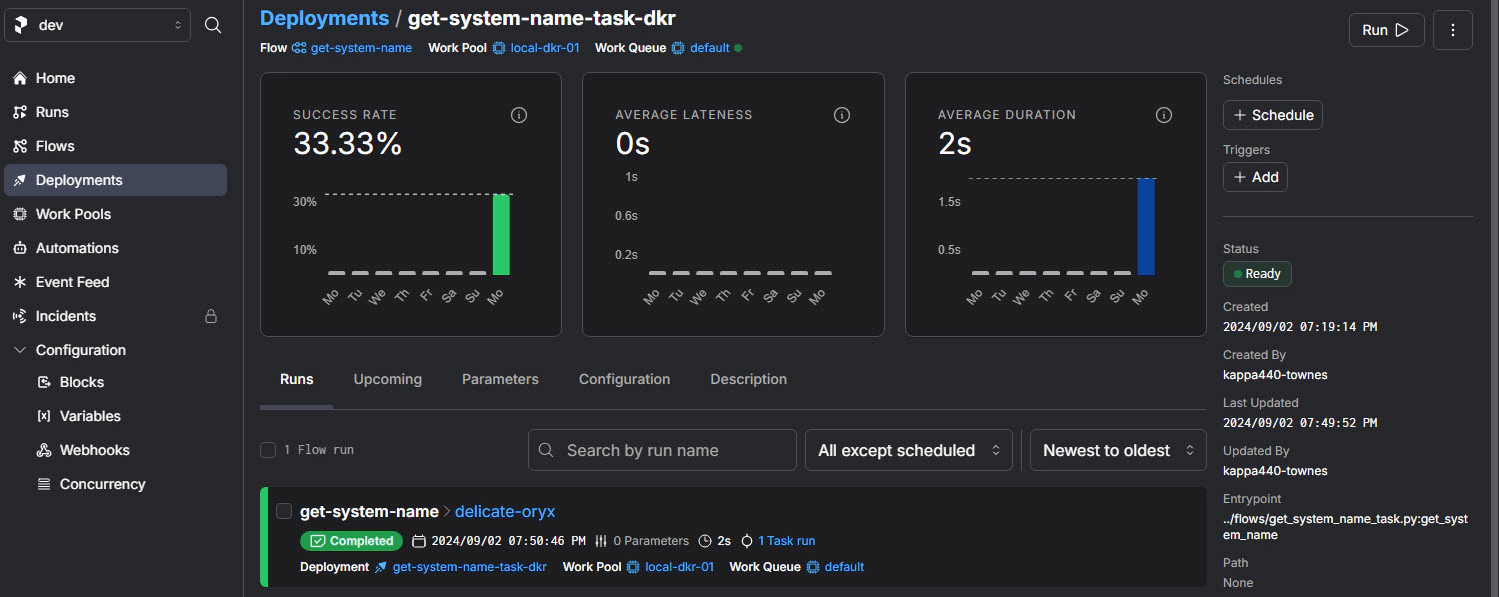

Create the deployment by running:

```bash
python get_system_name_deployment.py
```

While creating a deployment using serve in your Python code is straightforward and effective, a better way to manage deployments is by using a deployment YAML file. This approach provides a more structured and scalable method for managing configurations.

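The Docker-based deployment also requires a Docker image, which you can build by running ./build_docker_image_dkr.sh from the /orchestration-examples/prefect-demo-02/python directory. Because the deployment file sets image_pull_policy to "Never", the image it references (prefect-local-python:latest) must already exist locally.

```bash
cd /orchestration-examples/prefect-demo-02/python
./build_docker_image_dkr.sh
```

The deployment file (prefect_dkr.yaml) looks like this: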

```yaml
name: demo
prefect-version: 2.19.7

pull:
- prefect.deployments.steps.set_working_directory:
    id: clone-step
    directory: /app/deployments/
- prefect.deployments.steps.pip_install_requirements:
    directory: "/app/flows"
    requirements_file: requirements.txt
    stream_output: True

deployments:
- name: get-system-name-flow-dkr
  entrypoint: ../flows/get_system_name_flow.py:get_system_name
  parameters: {}
  work_pool:
    name: local-dkr-01
    work_queue_name: default
    job_variables:
      image: 'prefect-local-python:latest'
      image_pull_policy: "Never"
  schedules: []
```
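The entrypoint uses the format path/to/file.py:function_name, and its path is relative. Since the pull step first sets the working directory to /app/deployments/, the ../flows/... entrypoint presumably resolves to /app/flows at run time. The stdlib sketch below (`resolve_entrypoint` is a hypothetical helper, not part of Prefect) splits an entrypoint and resolves it against a working directory, which can be a quick way to sanity-check paths before deploying.

```python
import posixpath


def resolve_entrypoint(entrypoint, working_directory):
    """Split 'path/to/file.py:function' and resolve the path against working_directory."""
    path, sep, function_name = entrypoint.rpartition(":")
    if not sep or not path.endswith(".py") or not function_name:
        raise ValueError(f"expected 'path/to/file.py:function', got {entrypoint!r}")
    resolved = posixpath.normpath(posixpath.join(working_directory, path))
    return resolved, function_name


print(resolve_entrypoint(
    "../flows/get_system_name_flow.py:get_system_name",
    "/app/deployments/",
))
# ('/app/flows/get_system_name_flow.py', 'get_system_name')
```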

Navigate to /orchestration-examples/prefect-demo-02/python/deployments and deploy your flow:

```bash
cd /orchestration-examples/prefect-demo-02/python/deployments
prefect --no-prompt deploy --all --prefect-file prefect_dkr.yaml
```

This is the recommended way to manage deployments because it keeps their configuration organized in one file. You can also add schedule information to the deployment by filling in its schedules key.

In this blog, we’ve explored how Prefect makes workflow orchestration straightforward and powerful. We’ve set up and deployed flows using the free tier of Prefect Cloud, but it’s worth noting that Prefect is open-source and can be self-hosted if you prefer.

Prefect’s flexibility, whether in the cloud or self-hosted, makes it a great tool for managing complex workflows with ease. Whether you’re automating tasks or managing data pipelines, Prefect is designed to help you streamline your operations.

We covered some basic examples in this blog, but more examples are available in the demo repository, including examples for running dbt models using Prefect.

Hope this was helpful. Did I miss something? Let me know in the comments.

This blog represents my own viewpoints and not those of my employer. All product names, logos, and brands are the property of their respective owners.
